The conversation about the need for spatial intelligence is only just starting to get widespread attention. People are finally catching on to the fact that robots and AI will need to perceive the physical world to be able to interact with it.

In our previous post, we talked about AI copilots for physical work, a stepping stone to making the world accessible to AI. But every iteration of embodied AI, whether in a pair of smart glasses or a robot, will rely on a shared layer of spatial infrastructure: the real world web, a way for digital devices to browse and search physical locations.

This is what the Auki Network is: a decentralized machine perception network for collaboratively accessing and editing 3D maps of the world, making the physical world accessible to AI.

In this article, we explain what the real world web is and how it works, using our recent demos as examples.

So, what exactly is the real world web?

Think of it as the physical-world equivalent of the internet: a shared, decentralized layer overlaying physical spaces that devices, robots, and AI can “browse.” Just as websites are structured information for humans and machines online, the real world web structures spatial information in the physical world.

Auki domains, the fundamental building blocks of the Auki Network, are digital twins of physical spaces that can be accessed by smartphones, smart glasses, and robots. Auki domains are to the real world web what websites are to the world wide web.

Why does this matter? As we outlined in our master plan, bringing AI into the real world will increase its total addressable market by 3x. But without common, open, and interoperable infrastructure, robots and AI will remain siloed. They’ll need to relearn the same spaces, require redundant rescanning, and fail to scale. The real world web removes that barrier.

And it’s not just for robots.

The magic lies in the fact that the technology works the same way whether the visitor is a humanoid robot, a pair of smart glasses, or a drone. Each of them can position itself within the same persistent digital twin of the real world, which enables coordination and interoperability.

That is what the real world web provides.

After four years of building this core technology, our latest demos speak for themselves: robots autonomously navigating new environments using the Auki Network.

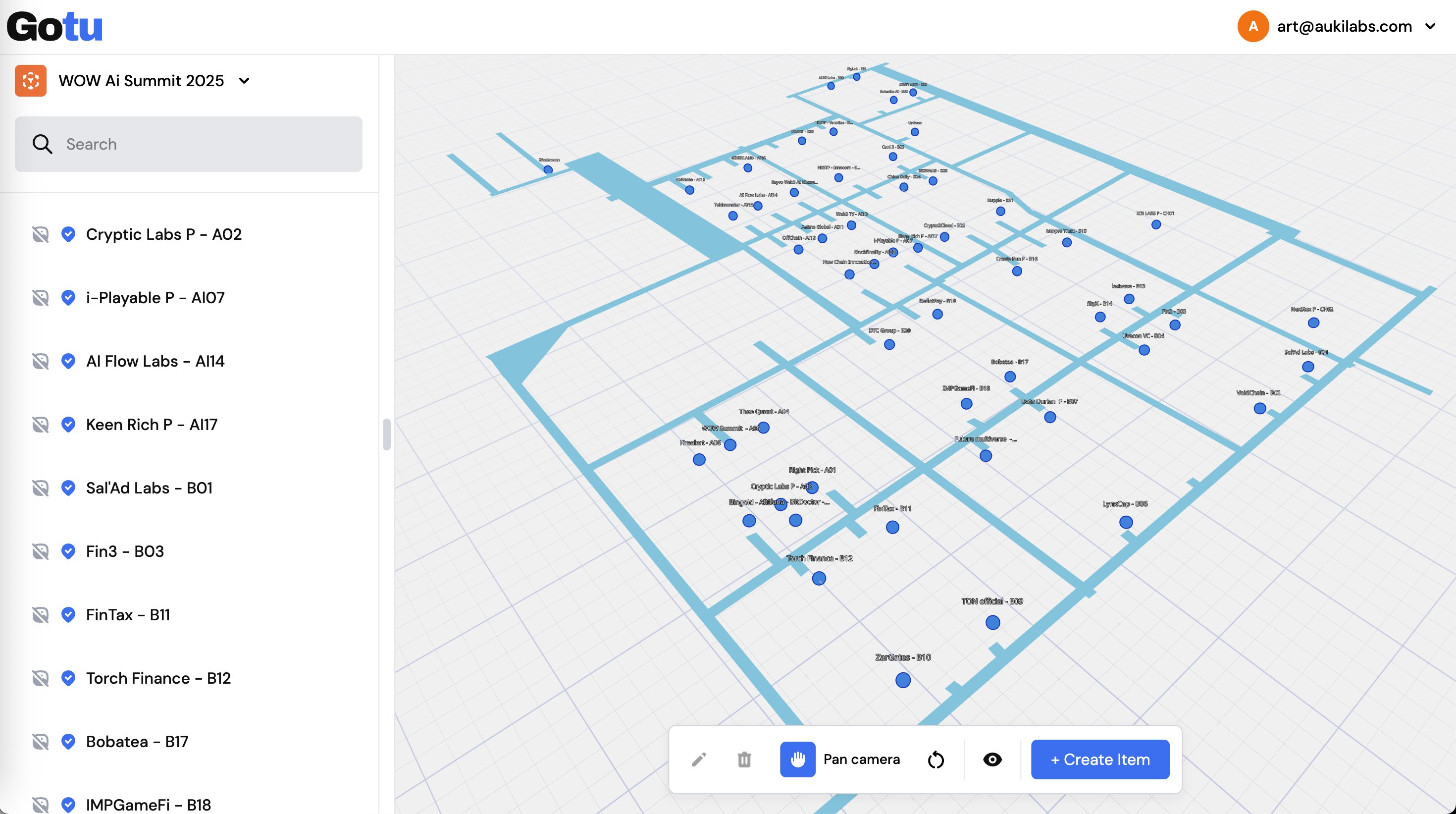

Last week, our Unitree G1 humanoid, Terri, was at the WOW Summit showing off autonomous robot navigation using an Auki domain.

If you aren’t overly familiar with robotics, it might not be immediately obvious why this is impressive, so let’s break it down.

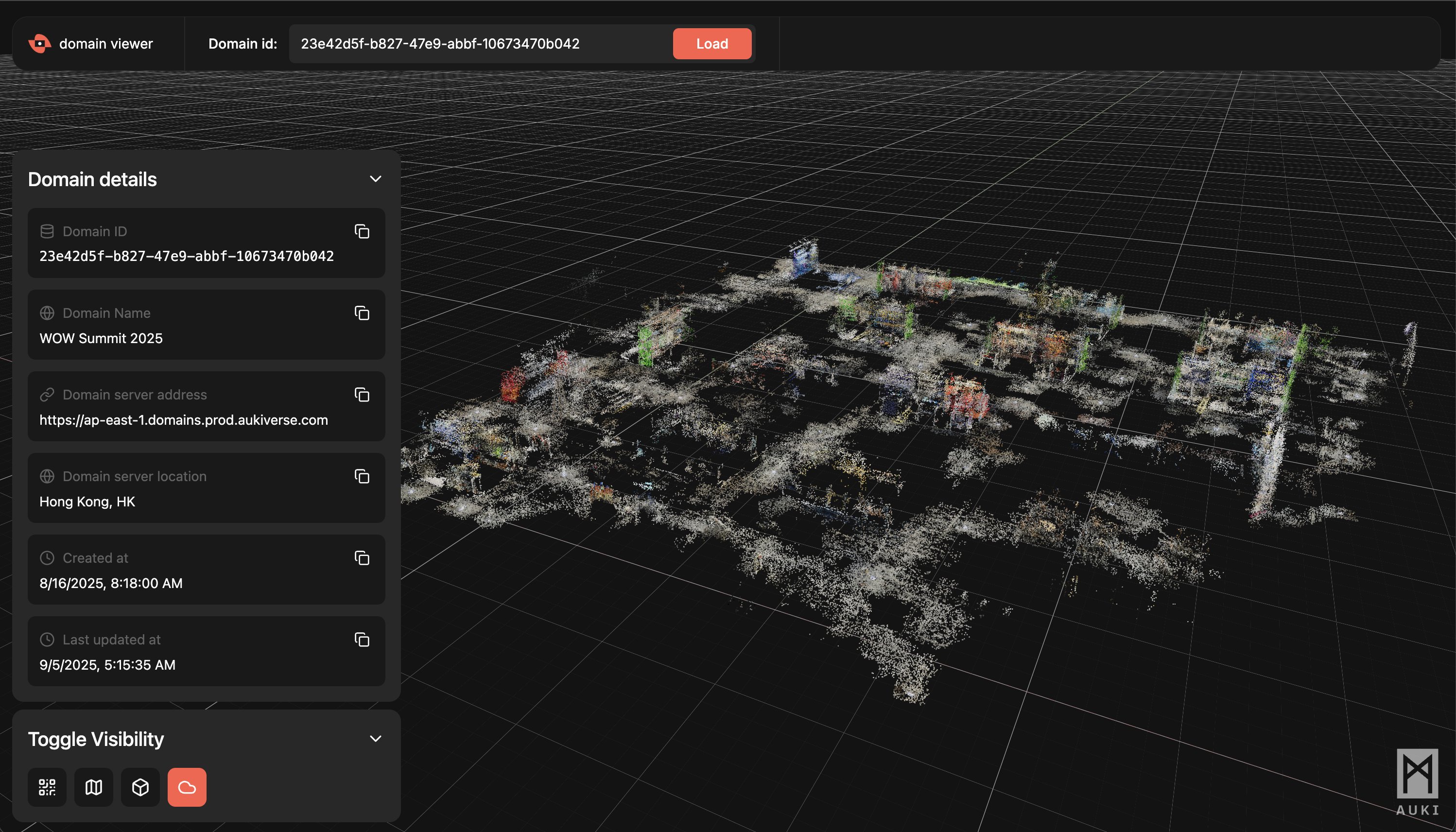

First, Terri is navigating using an Auki domain that was set up by the team the day before in a process that took a couple of hours.

The key here is that this “map” isn’t specifically designed for Terri. Terri is accessing the same map that human visitors use for our app-free AR navigation. In other words, he is navigating a new environment that has been pre-mapped, but not for him. Most robots that rely on a map require the environment to be pre-mapped specifically for them, using a proprietary, closed map.

[Screenshot of Terri’s sensor data and Auki domain]

Next, Terri is navigating autonomously using an Auki domain and his onboard sensors. This is possible because Auki domains not only contain relevant information such as navigable areas and obstacles, but also include route planning and optimization.

Terri’s onboard sensors do not “know” where a specific booth is. He can navigate to it because he can access information about the physical space in the domain data: in this case, a directory of points of interest corresponding to booth locations.
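To make the idea concrete, here is a minimal Python sketch of how a robot could combine a domain’s point-of-interest directory with its navigable areas to plan a route. The data layout (a small occupancy grid plus a `points_of_interest` dictionary) and the `plan_route` function are hypothetical, and plain breadth-first search stands in for whatever planner a real domain uses. This is not the Auki domain format or API.

```python
from collections import deque

# Hypothetical, simplified domain data: a 2D occupancy grid ("0" = navigable,
# "1" = obstacle) plus a directory of points of interest as (row, col) cells.
# This is NOT the Auki domain format; it only illustrates the idea.
DOMAIN = {
    "grid": [
        "00000",
        "01110",
        "00010",
        "11010",
        "00000",
    ],
    "points_of_interest": {"booth_a": (4, 0), "booth_b": (2, 2)},
}


def plan_route(domain, start, poi_name):
    """Breadth-first search from start (row, col) to a named point of interest.

    Returns the list of grid cells on a shortest path, or None if unreachable.
    """
    # The robot's own sensors don't know where the booth is; the domain does.
    goal = domain["points_of_interest"][poi_name]
    grid = domain["grid"]
    rows, cols = len(grid), len(grid[0])
    came_from = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk back through the predecessors to rebuild the path.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            in_bounds = 0 <= nr < rows and 0 <= nc < cols
            if in_bounds and grid[nr][nc] == "0" and (nr, nc) not in came_from:
                came_from[(nr, nc)] = cell
                queue.append((nr, nc))
    return None
```

For example, `plan_route(DOMAIN, (0, 0), "booth_b")` returns the cells `(0, 0)`, `(1, 0)`, `(2, 0)`, `(2, 1)`, `(2, 2)`. The robot only has to know where it is; the destination comes from the shared domain data.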

This builds on an earlier demo we ran in our office demo space last week.

The bottom line is that many applications already run on Auki domains, including AR experiences, spatial analytics, and accessible navigation for blind people. In our latest demos, we have also shown how domains can provide an external, shared sense of space for robots and AI copilots.

We go into the intricacies of how Auki domains work in other videos and articles, but in brief, an Auki domain consists of a digital twin of a physical space, including a universal coordinate system.

Domains are created by smartphones collaboratively capturing the necessary sensor data, which is then sent to a reconstruction server and merged into the digital twin.
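As a rough illustration of the merging step, the sketch below assumes each phone’s capture is already expressed in the domain’s shared coordinate system and simply pools the points, collapsing near-duplicates with a voxel grid. The function name, the 5 cm voxel size, and the averaging rule are assumptions for illustration; a production reconstruction pipeline also has to align and refine the captures, which this sketch skips.

```python
from collections import defaultdict

# Hypothetical sketch: every capture session is assumed to already be in the
# domain's shared coordinate system (for example, because each phone scanned
# the same portal). Merging then means pooling the points and collapsing
# near-duplicates that fall into the same voxel.


def merge_sessions(sessions, voxel_size=0.05):
    """Merge point lists from several capture sessions into one point cloud.

    sessions: iterable of lists of (x, y, z) points in metres, domain frame.
    voxel_size: edge length of the deduplication voxel in metres (assumed).
    Returns one averaged point per occupied voxel, ordered by voxel index.
    """
    buckets = defaultdict(list)
    for points in sessions:
        for x, y, z in points:
            # Floor division maps each point to the integer index of its voxel.
            key = (int(x // voxel_size), int(y // voxel_size), int(z // voxel_size))
            buckets[key].append((x, y, z))
    merged = []
    for key in sorted(buckets):
        pts = buckets[key]
        n = len(pts)
        merged.append(tuple(sum(p[i] for p in pts) / n for i in range(3)))
    return merged


phone_a = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
phone_b = [(0.01, 0.0, 0.0), (2.0, 0.0, 0.0)]
cloud = merge_sessions([phone_a, phone_b])
```

Here the two phones both captured a point near the origin, so those observations collapse into one averaged point, leaving three points in total.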

QR codes, also called portals, anchor the domain, providing both instant calibration points and points of entry for devices.

Devices visiting an Auki domain, such as Terri, can instantly position themselves in 3D space to within a few centimeters just by viewing a QR code, even from a distance.
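The geometry behind portal-based positioning can be sketched in a few lines. If the domain stores each portal’s pose, and the device’s camera measures the portal’s pose relative to itself, then the device’s pose in the domain is the portal’s stored pose composed with the inverse of the measured one. This simplified Python version works in 2D (x, y, heading) and assumes the camera-side measurement is already available; real devices solve the full 3D, six-degree-of-freedom problem, and the function names here are illustrative, not part of any Auki SDK.

```python
import math

# Minimal 2D (x, y, heading) sketch of portal-based localization. Estimating
# the QR code's pose from the camera image is assumed to have happened already.


def compose(a, b):
    """Express pose b, given relative to frame a, in a's parent frame."""
    ax, ay, at = a
    bx, by, bt = b
    return (
        ax + math.cos(at) * bx - math.sin(at) * by,
        ay + math.sin(at) * bx + math.cos(at) * by,
        at + bt,
    )


def invert(p):
    """Return the inverse rigid transform of pose p."""
    x, y, t = p
    c, s = math.cos(t), math.sin(t)
    return (-(c * x + s * y), s * x - c * y, -t)


def localize(portal_in_domain, portal_in_device):
    """Device pose in the domain frame from a single portal observation.

    portal_in_domain: the portal's pose as stored in the domain.
    portal_in_device: the portal's pose as measured by the device's camera.
    """
    return compose(portal_in_domain, invert(portal_in_device))
```

For instance, `localize((5.0, 2.0, math.pi / 2), (2.0, 0.0, 0.0))`, a portal stored at (5, 2) with heading π/2 and seen 2 m straight ahead, places the device at roughly (5.0, 0.0) with heading π/2.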

Portals are not the only way for devices to position themselves, but they are currently the fastest and most scalable solution. In an upcoming article about how smart glasses work with Auki domains, we will go into those alternative methods in more detail.

We’re continuing to build out the infrastructure supporting the real world web. The reconstruction of the WOW domain was processed on community nodes: the Auki Network distributed the sensor data to a network of consumer GPUs that translated the data into a 3D map.
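This article doesn’t describe how the network splits up a reconstruction job, so the following is only a generic sketch of the idea: break the captured sensor data into chunks and hand each chunk to the community node with the least work so far (longest-processing-time-first greedy scheduling). The chunk sizes, node names, and policy are hypothetical and are not the Auki Network’s actual scheduler.

```python
import heapq

# Hypothetical sketch of fanning a reconstruction job out to community nodes.
# Node names, chunk sizes, and the greedy policy are illustrative assumptions.


def assign_chunks(chunk_sizes, nodes):
    """Greedily assign each chunk to the node with the least assigned work.

    chunk_sizes: dict of chunk id -> estimated work (e.g. number of frames).
    nodes: list of node ids.
    Returns a dict of node id -> list of chunk ids.
    """
    heap = [(0, node) for node in nodes]  # (assigned work, node id)
    heapq.heapify(heap)
    plan = {node: [] for node in nodes}
    # Placing the largest chunks first tends to keep the final loads even.
    for chunk, size in sorted(chunk_sizes.items(), key=lambda kv: -kv[1]):
        load, node = heapq.heappop(heap)
        plan[node].append(chunk)
        heapq.heappush(heap, (load + size, node))
    return plan


plan = assign_chunks({"a": 5, "b": 4, "c": 3, "d": 2}, ["gpu1", "gpu2"])
```

With chunks of size 5, 4, 3, and 2 and two nodes, each node ends up with 7 units of work.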

Beyond that, we’re thinking about what’s coming next: an App Store for the real world. Just as the internet enabled a developer ecosystem of websites and apps, the real world web will unlock a creator economy for physical AI implementations. Developers and builders will be able to create AI copilots, robot applications, and other spatially aware applications that plug directly into this shared infrastructure.

Stay tuned for some exciting news on that front soon.

The real world web is necessary infrastructure for the AI and robot revolution.

However, no single robotics or AI-focused company is ultimately best placed to build it. It can’t be siloed or closed. Any solution that isn’t open and interoperable will be dead in the water.

At Auki, we have been building our vision of the real world web for years. Our latest demos showcase what this technology is capable of and offer a glimpse of a future where robots and AI copilots collaborate with humans in shared spaces.

Auki is making the physical world accessible to AI by building the real world web: a way for robots and digital devices like smart glasses and phones to browse, navigate, and search physical locations.

70% of the world economy is still tied to physical locations and labor, so making the physical world accessible to AI represents a 3X increase in the TAM of AI in general. Auki's goal is to become the decentralized nervous system of AI in the physical world, providing collaborative spatial reasoning for the next 100bn devices on Earth and beyond.

X | Discord | LinkedIn | YouTube | Whitepaper | aukilabs.com