Apple’s Double Tap Is The Gateway To Mainstream Spatial Computing Adoption

Yesterday, at Apple’s much-anticipated iPhone 15 launch event, something remarkable was revealed, and it’s not just about USB-C or the latest gadgets like the Apple Watch Series 9 and Apple Watch Ultra 2.

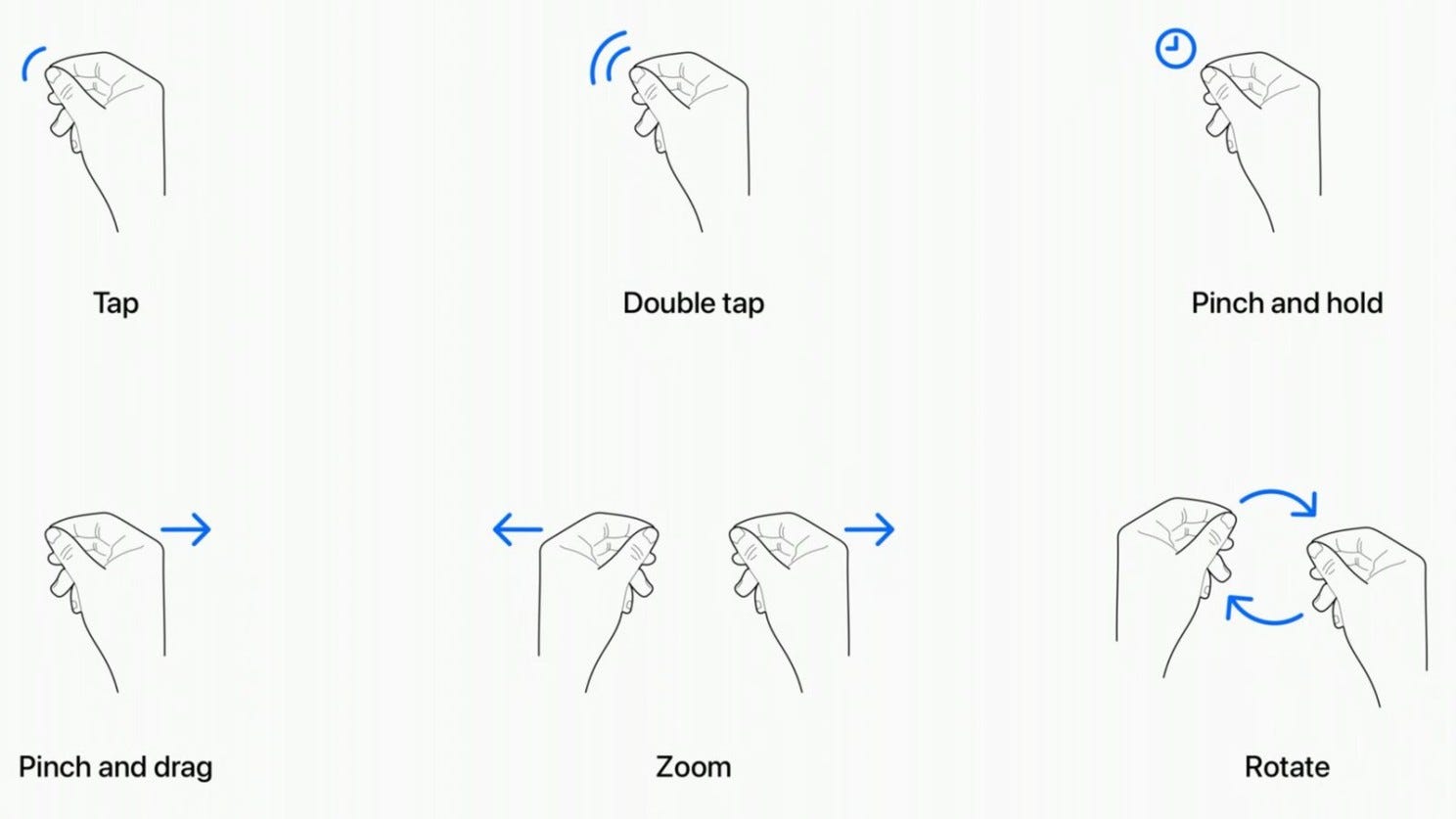

It’s about a groundbreaking feature known as “Double Tap.” Imagine controlling your Apple Watch screen with a simple pinch of your fingers in thin air, without ever touching it.

Double Tap will allow users to tap their index finger and thumb together twice to answer or end phone calls, play and pause music, or snooze alarms. The hand gesture can also scroll through widgets, much like turning the Digital Crown.

It’s not just a fun gimmick; it’s the future, and here’s why it’s all about Apple Vision Pro.

For years, the world of virtual reality has grappled with a significant challenge: how to control it effectively. While other industry giants have largely relied on handheld controllers with traditional buttons, Apple’s Vision Pro headset, unveiled earlier this year, takes a revolutionary path by using external cameras to track the motion of your hands directly.

Now, with the introduction of the Double Tap feature on the Apple Watch Series 9 and Apple Watch Ultra 2, Apple is pushing the boundaries of using hand movements as input. While the exact mechanics are unclear, I assume the watch’s accelerometer is closely involved in processing the nuanced movements of your wrist and hand.

Why is this groundbreaking?

- Apple is exploring a new way to use the movement of your hands as input. This could ignite innovation among developers, encouraging them to incorporate touch-free pinch gestures into their apps well before the official launch of the Vision Pro, regardless of whether those apps are related to Apple’s spatial computer.

- It frontloads the user. This is an opportunity for us as users to adapt and learn. By the time the Apple Vision Pro reaches store shelves, anyone with an up-to-date Apple Watch will already be adept at navigating devices with their bare hands. This familiarity will make the Vision Pro feel intuitive and natural, while other devices still reliant on controllers may seem outdated and alien.

- It provides Apple with a wealth of data on how people use such gestures and how they function physiologically. Observing that behavior gives the company an advantage in a whole new field of UI and UX: gestural design and spatial interfaces.

Will Double Tap extend to the iPhone and MacBook, allowing Apple Watch users to seamlessly integrate their wrist-computer with all other Apple devices?

I wouldn’t put it past them.

Love it or hate it, Apple’s long-term vision for spatial computing is nothing short of transformative; it’s about the internet materializing in our physical space, allowing us to look through an Apple product rather than just at it.

This shift marks a new era in human-computer interaction and in how we perceive the digital world. Spatial computing is not a mere technological upgrade but a paradigm shift, one that challenges us to rethink how we interact with technology and perceive our digital surroundings.

We are on the cusp of a new era where the digital and physical seamlessly converge, opening up endless possibilities for creativity and human connection.

— Damir First, Head of Communications

About Auki Labs

Auki Labs is at the forefront of spatial computing, pioneering the convergence of the digital and physical to give people and their devices a shared understanding of space for seamless collaboration.

With a focus on user-centric design and privacy, Auki Labs empowers industries and individuals to embrace the transformative potential of spatial computing, enhancing productivity, engagement, and human connection.

Auki Labs is building the posemesh, a decentralized spatial computing protocol for AR, the metaverse, and smart cities.
